Search engine optimization. Everyone talks about it, but what does it really mean? Are all search engine optimization marketers equal? Do they all share the same strategies and differ only in how well they execute them?

In a way, SEO is unlike most industries. The entire industry is built around a secret, and that secret is a giant math problem that only a computer understands. To top it all off, that math problem isn’t static. The formulas are updated, and the value of each variable shifts like the twist of a knob.

Another odd part of SEO:

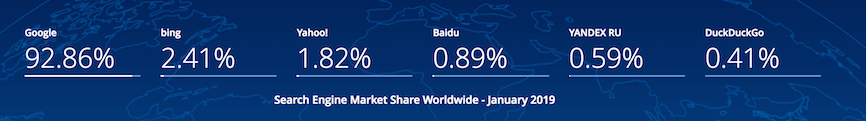

Honestly, we SEOs are basically focusing on just one algo, Google’s, and it’s super strange for an entire industry to revolve around a single service provider. That would be like a personal trainer choosing to train only one type of person. You may become a super expert as a result of the narrowed focus, but normally you cut off a huge section of the market, unless, of course, the one group you choose has 93% of the market.

So, when most people think of search engine marketing, it’s all about marketing in Google’s organic results.

Which means keywords and content and title tags and citations and backlinks … The list of must-dos seems never-ending. No wonder people hire an SEO consultant or buy tools like Raven Tools. You either need an expert who can use SEO tools, or you need to be able to use the tools yourself and have at least a beginner’s level of SEO knowledge.

But if you really want to understand how you gain new customers, and save yourself time and money in the process, I guarantee that you can gain more expertise in SEO marketing, regardless of your current level of knowledge. Anyone who says otherwise most likely has an inflated sense of self, or is just blowing smoke in order to impress (little kid syndrome, as I like to call it).

But I digress – In this article, we’ll cover the basics of SEO marketing – what it is and what it isn’t.

What Is SEO Marketing?

SEO marketing is the process of using search engine optimization to market a business without relying entirely on paying a search engine for every click you receive.

In other words, SEO marketing includes making sure that all the elements of your business’s online presence line up to create the most cohesive, comprehensive representation possible of who you are, what you do, and what you offer to your customers and clients.

Proper SEO marketing is reaching out to people to bring awareness to your brand, to pique interest, and to catch someone at a decision point. Organic efforts should target all three stages, and you should be connecting them to one another:

- Awareness

- Consideration

- Decision

You should know which keywords to target and which ones to avoid. In my opinion, the best walkthrough of mastering your keyword research is written by Nick Eubanks. Check it out if you feel like learning.

You should know all about backlinks and you should understand a range of link building strategies.

You should know how to optimize for conversions, as this is the foundation for everything. If you can’t convert your traffic, then 100,000 or 1,000,000 visitors mean nothing (unless you run ads on your site or run some sort of affiliate business).

I’ll go into more detail as we go on, but for now, let’s continue.

Why Is SEO Marketing Important?

Briefly, SEO marketing is important because, in an increasingly crowded digital landscape, you need to make sure your business stands out above the rest so you can gain those all-important new customers and clients.

Without SEO marketing, you will forever rely on paid ads to bring in traffic. The modern era, in my opinion, is the era of passive income. The technology exists to allow us to generate income without actively doing anything, whether in the digital space, real estate, investing, etc. You need to do the legwork to set up passive income, but once it’s there, it takes much less effort to continue making money.

Your website is a 24/7 advertisement for your business, and you need to keep it in prime condition so potential customers can find it when they need your goods or services. Get seen. Grab attention. Lead prospects to a decision point.

What Are the Elements of SEO Marketing?

SEO marketing isn’t just one “thing.”

To effectively leverage SEO marketing for your business, you have to have a plan for a variety of channels and tasks that all play into how your website performs for customers and ranks in the SERPs.

The core elements of SEO marketing include:

- On-Page Optimization

- Content

- Off-Page Optimization

- Link Building

- Paid Advertising

- Social Media Marketing

On-Page Optimization

On-page optimization is making sure that everything physically on your website is in the best condition possible and is geared toward drawing in the kinds of users you want to convert into customers.

When many people think or talk about what SEO marketing is, on-page optimization is foremost in their minds. This is the bread and butter, the building blocks, of any good online marketing campaign.

On-page optimization covers a variety of elements, including:

Keywords

Picking the right keywords for your website is essential.

If you aren’t lining the words on your website up with the things your potential customers are actually searching for, all your on-page efforts will go to waste.

You need keywords that capture potential leads at every stage of the buyer’s journey, from those just doing cursory online research to those ready to buy right now. That coverage is how you build brand awareness and establish yourself as an authority in your industry.

Choosing the right keywords for your business involves evaluating your own impressions of your business and customers, investigating what your competitors are doing, and gathering the data to back up your assumptions.

It’s not an exact science, so you always have to stay on your toes, ready to adjust the keywords you use on your website to better align with what people are searching for.

Site Speed

Today, users expect websites to be fast. They don’t want to wait around while your JavaScript loads, or for your too-large header photos to display.

Conducting a site audit to find and address technical issues that could negatively impact your website’s speed is important to improving your website traffic numbers.

User Experience

Just as they expect a fast website, users expect the websites they visit to be laid out logically and to have some sense of organization behind how the content is presented.

Items such as the size, placement, color, and text of the buttons you want people to click, as well as the placement and type of photos and videos you use on your website, are important elements that impact user experience.

Some other on-page optimization tasks include:

- Title tags

- Meta descriptions

- SEO-friendly URLs

- Image alt text

- Link anchor text

- Website design
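Several of the items above can be spot-checked automatically. The following is a minimal sketch, assuming you only want to confirm that a page has a title tag, a meta description, and alt text on its images. The class name `OnPageAudit` and the sample HTML are hypothetical, made up for illustration, and a real audit tool would check far more than this.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects the title, meta description, and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta_description = None
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "img" and not attrs.get("alt"):
            # Note: this also flags alt="", which can be intentional
            # for purely decorative images.
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


# Hypothetical page used only to exercise the checker.
sample_html = """
<html><head>
<title>Kitchen Remodeling in Springfield</title>
<meta name="description" content="Hypothetical sample description.">
</head><body>
<img src="before.jpg" alt="Kitchen before the remodel">
<img src="after.jpg">
</body></html>
"""

audit = OnPageAudit()
audit.feed(sample_html)

print("Title:", audit.title or "MISSING")
print("Meta description:", audit.meta_description or "MISSING")
print("Images missing alt text:", audit.images_missing_alt)
```

Running a check like this across every page of a site gives you a quick to-do list before you start on the harder work of rewriting titles and descriptions.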

Content

While content is technically part of on-page SEO, it deserves its own mention because of how important dynamic, well-optimized, user-centric content is to a website.

The content on your website needs to incorporate those keywords you chose as part of your on-page optimization, but it still needs to read naturally and be crafted with your customers’ needs in mind.

If you’re putting out nothing but, “Buy my product!” blog posts, you’re going to turn users off and it will impact your rankings in the SERPs.

Search engines look for content that is:

- Helpful

- Relevant

- Timely

While it may not seem as if that blog post about choosing paint colors for your kitchen is totally relevant to your kitchen remodeling business, it provides helpful, relevant information for people considering a kitchen remodel, bringing them to your website and building your authority within your industry.

The content on your website, from your main services pages to your blog posts, needs to:

- Identify a problem your reader may have (Example: a leaky roof)

- Empathize with the plight that the problem puts them in (Leaky roof = wet floors, a mess to clean up, etc.)

- Explain how they can solve that problem (Getting the leak fixed)

- Show that you are the best solution to their problems (Hiring you to fix the leaky roof means it’s done right, it takes them less time, they’ll spend less money in the long run, etc.)

Off-Page Optimization

Your website isn’t the only place where your business shows up online.

There are a variety of places, including local business listings and industry-specific databases, where your website may be listed with a link back to you.

The first step to getting your off-page optimization rolling is to claim your Google My Business listing if you haven’t already, or to check all the information for accuracy if you have. Running an audit of all your other directory listings and correcting any inaccuracies is important so that crucial information, such as your name, address, and phone number, is the same on every website.

Once you’ve gotten your Google My Business listing in order and straightened out any other citations that may be incorrect, begin identifying other directories that may be useful to your business and build your profiles there.
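The listing audit described above can be partly automated. Here is a minimal sketch, assuming you have already collected your name, address, and phone number from each directory by hand. The business details, the directory names, and the helper `normalize_nap` are all hypothetical, and the normalization is deliberately simple (it will not treat "St" and "Street" as the same).

```python
import re


def normalize_nap(name, address, phone):
    """Reduce a listing to a comparable form: lowercase text, digits-only phone."""
    def clean(text):
        return re.sub(r"\s+", " ", text.strip().lower())

    return clean(name), clean(address), re.sub(r"\D", "", phone)


# Hypothetical listings gathered from each directory.
listings = {
    "Google My Business": ("Acme Roofing", "12 Main St, Springfield", "(555) 010-0199"),
    "Directory A": ("Acme Roofing", "12 Main St,  Springfield", "555-010-0199"),
    "Directory B": ("Acme Roofing LLC", "12 Main Street, Springfield", "555 010 0199"),
}

# Treat the Google My Business listing as the source of truth.
reference = normalize_nap(*listings["Google My Business"])
mismatched = [
    site for site, nap in listings.items() if normalize_nap(*nap) != reference
]

print("Listings to correct:", mismatched)
```

In this made-up data, only "Directory B" is flagged, because its business name and street spelling differ from the reference listing, while formatting differences in the phone number and extra spaces are ignored.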

Link Building

If you didn’t know already, backlinks happen when another website links to your website. When this happens, that other website passes a little bit of its authority to your site, raising that page’s status.

As a quick primer, I should discuss what PageRank is, as it’s something intimately tied to understanding backlinks and their effect on your site.

PageRank is actually a borrowed idea. It was based on a calculation that predicted who would win the Nobel Prize based on how often a work was cited in other important works.

PageRank is a measure of distribution and is used for probability calculations. It is calculated recursively: the calculation is repeated until the values stop changing, at which point the average value across all pages is as close as possible to 1.

Each URL has its own value based on the weighted distribution of all known links on the entire internet, indicating what proportion of those links go to that specific URL.
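The recursive calculation can be sketched in a few lines of Python. This is a toy version of the commonly published PageRank formula, not Google’s production algorithm. The damping value of 0.85, the function name `pagerank`, and the three-page `toy_web` graph are all assumptions made for illustration, and the sketch assumes every page links out to at least one other page in the graph.

```python
def pagerank(links, damping=0.85, tol=1e-9, max_iter=200):
    """links maps each page to the list of pages it links to."""
    pages = list(links)
    rank = {page: 1.0 for page in pages}  # every page starts at 1
    for _ in range(max_iter):
        # Each page keeps a small base value, then receives shares from linkers.
        new_rank = {page: 1.0 - damping for page in pages}
        for page, outlinks in links.items():
            share = rank[page] / len(outlinks)  # split evenly across its links
            for target in outlinks:
                new_rank[target] += damping * share
        change = max(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if change < tol:  # values have stopped moving
            break
    return rank


# Hypothetical three-page site.
toy_web = {
    "home": ["blog", "pricing"],
    "blog": ["home"],
    "pricing": ["home", "blog"],
}

ranks = pagerank(toy_web)
average = sum(ranks.values()) / len(ranks)

for page, value in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {value:.3f}")
print(f"average: {average:.3f}")
```

In this toy graph the home page ends up with the highest value because both other pages link to it, and the average across the three pages stays at 1.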

That PageRank value is then divided between all the links found at that URL. So if the URL was a webpage, and that page had 20 links in its navigation menu, 5 links in the header, 3 links in the footer, and 22 links in the body of the content, that’s 50 links in total, and so each link could pass 2% (1/50th) of the PageRank of that page along.
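The arithmetic in that example is easy to reproduce. This is a small sketch using the same link counts. The dictionary name `links_on_page` and the even split are illustrative assumptions that follow the simplified description here, not a statement of how Google weights individual links today.

```python
# Link counts from the example page above.
links_on_page = {"navigation": 20, "header": 5, "footer": 3, "body": 22}

total_links = sum(links_on_page.values())
share_per_link = 1 / total_links  # fraction of the page's PageRank per link

print(f"Total links: {total_links}")
print(f"Share passed by each link: {share_per_link:.0%}")
```

The takeaway is that every extra link on a page shrinks the share each of the other links can pass along.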

Now, a backlink isn’t just good from a PageRank perspective. Links can provide authority signals and trust signals, and they add to the overall link velocity signal for your site, so a backlink has many benefits.

If I receive backlinks to the URL https://raventools.com/blog/analyze-backlinks-with-raven/, I receive a boost in page authority, provided that the link is set to follow. The amount of benefit I get is directly tied to the number of links on the linking page.

Google likes backlinks, as long as they aren’t spammy or bought, of course. I’ll go ahead and say that Google will reward purchased backlinks as well, but if you get links in a way that goes against Google’s guidelines, then you should be sneaky about it. Do so at your own risk. Ideally, backlinks should be about building trust and relationships with real people in the digital community, but often, backlinks are far from that scenario.

Paid Advertising

Paid ads aren’t just for newspapers and magazines anymore.

Now, you can pay to put your business’s ad on someone else’s website, on prominent social media sites such as Facebook and Pinterest, or on the top of the SERPs in Google.

Regardless of how much money you spend on paid advertising, though, it isn’t going to make a dent in your leads and conversions if you don’t have your overall SEO marketing on point. Instead, you’ll just be pouring money down the drain targeting the wrong audience or keywords.

Creating a good landing page and optimizing for conversions by understanding your audience is necessary to make any type of traffic valuable. If you can’t convert, then you won’t be able to continue bidding on keywords.

Social Media Marketing

Social media marketing is one of the flashier aspects of online marketing, but it has the least impact on a website’s performance in the SERPs.

Having profiles on relevant social media platforms and keeping somewhat active can help you gain visitors and brand recognition, but putting all your eggs in the social media marketing basket isn’t likely to gain you much ground.

Think of your social media profiles as ways to help you engage directly with your customers and clients. Share quick company updates, links to articles within your industry that you find relevant, and maybe a meme to get a laugh or two.

If you’ve got an active, engaged social media profile, your customers are more likely to reach out with feedback that can help you adjust your business strategy, but it isn’t going to do a lot for your SEO.

Conclusion

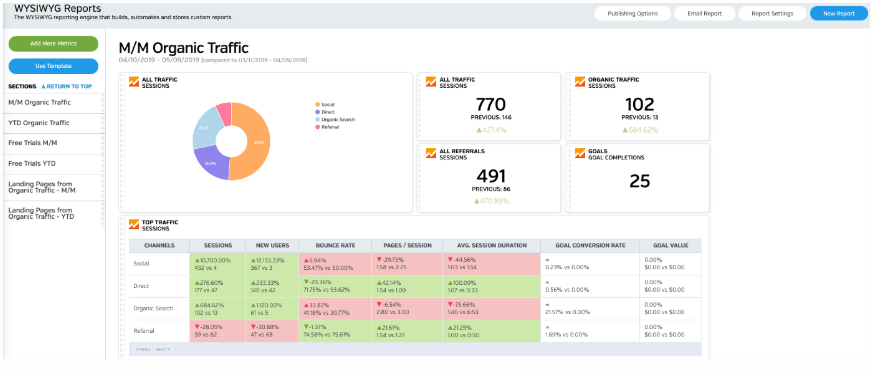

The last part of SEO marketing is to focus on the actual tools that will help facilitate all of your campaigns. Plenty of tools exist that provide solutions for each particular niche within SEO marketing, but with Raven Tools, you can measure and track not only your own SEO marketing efforts but also those of your competitors, keeping all your information in one integrated platform.

For a full list of product offerings, check out the detailed pricing page. Give it a test and see how you like having all the tools necessary for an SEO marketing campaign in one place. Combine that with the white-labeled reporting and BAM, a fancy marketer you will be.
